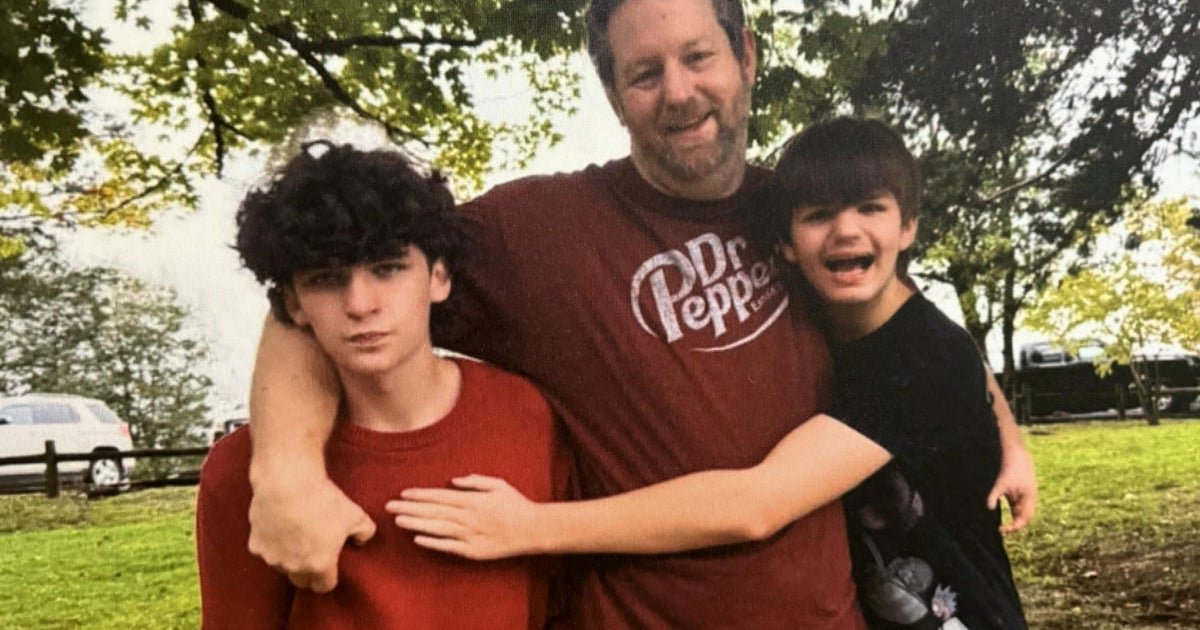

Elijah Heacock was a vibrant teen who made people smile. He “wasn’t depressed, he wasn’t sad, he wasn’t angry,” father John Burnett told CBS Saturday Morning.

But everything changed when Elijah received a threatening text that included an A.I.-generated nude photo of himself and demanded he pay $3,000 to keep it from being sent to friends and family. He died by suicide shortly after receiving the message, CBS affiliate KFDA reported. Burnett and Elijah’s mother, Shannon Heacock, didn’t know what had happened until they found the messages on his phone.

Elijah was the victim of a sextortion scam, where bad actors target young people online and threaten to release explicit images of them. Scammers often ask for money or coerce their victims into performing harmful acts. Elijah’s parents said they had never even heard of the term until the investigation into his death.

“The people that are after our children are well organized,” Burnett said. “They are well financed, and they are relentless. They don’t need the photos to be real, they can generate whatever they want, and then they use it to blackmail the child.”

The origins of sextortion scams

Reports of the scheme have skyrocketed: The National Center for Missing and Exploited Children said it received more than 500,000 reports of sextortion scams targeting minors in just the last year. At least 20 young people have taken their own lives because of sextortion scams since 2021, the Federal Bureau of Investigation estimates.

Teen boys have been specifically targeted, the NCMEC said in 2023, and with the rise in generative A.I. services, the images don’t even need to be real. More than 100,000 reports filed with the National Center for Missing and Exploited Children this year involved generative A.I., the organization said.

“You don’t actually need any technical skills at this point to create this kind of illegal and harmful material,” Dr. Rebecca Portnoff, the head of data science at Thorn, a non-profit focused on preventing child exploitation online, said. Just looking up how to make a nude image of someone will bring up search results for apps, websites and other resources, Portnoff said.

The crisis may seem overwhelming. But there are solutions, Portnoff said. Thorn has its own initiative, “Safety By Design,” which outlines barriers A.I. companies should set when developing their technology. Those barriers are designed to help reduce sextortion, Thorn said. A handful of major A.I. companies have agreed to the campaign’s principles, Thorn said.

“There are real, tangible solutions that do exist that are being deployed today that can help to prevent this kind of misuse,” Portnoff said.

Government entities are also working to fight sextortion. The recently passed “Take It Down” Act, championed by Melania Trump and signed into law by President Trump, makes it a federal crime to post real or fake sexually explicit images of someone online without their consent. The law also requires social media companies and other websites to remove such images within 48 hours of a victim’s request.

Elijah’s parents said they never want other families to suffer like they have. They have fought for change, CBS affiliate WLKY reported. They said they hope the “Take It Down” Act will make a difference.

“It’s kind of like a bullet in a war. It’s not going to win the war,” Burnett said. “No war is ever won by one bullet. You got to win battles. You got to win fights. And we’re in it.”